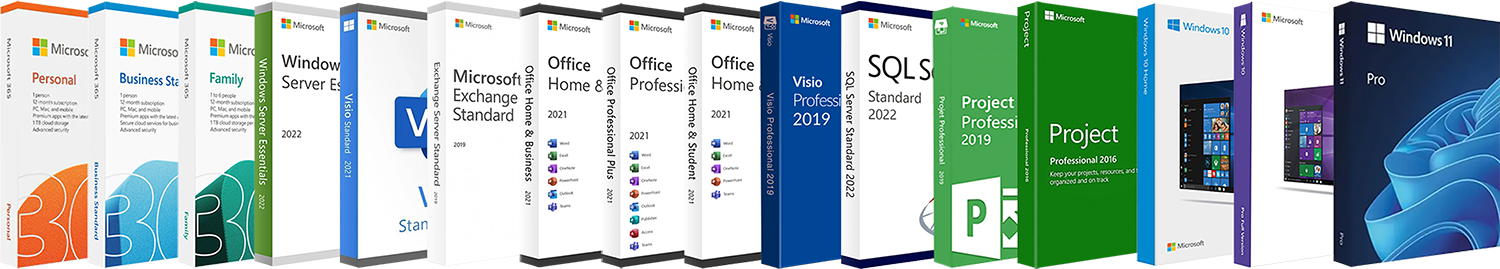

See our Microsoft Product Offerings

Product Categories

Warranty and Support

Give us a call or have a chat with our online representative.

Click Here

Business Customers

We have been serving the industry for 10 years now.

Click Here

Resellers

You can now check out from our website easily and without any issues.

Click Here